MIT MEDIA LAB

Presence interfaces

Human-space interaction

Sensing and translating human presence into meaningful experiences

Human presence is an important part of the spatial experience of architecture. Wearables worn within a space have the potential to translate that presence into useful tools that augment how architecture is experienced. By making the invisible visible, or by quantifying presence and turning it into meaningful experience, wearables can become an important part of elevating our experience of space. The following projects are investigations into sensing presence and translating it through an interface.

Digipresence: Translating presence into an audio visual feed

Digipresence is a dynamic presence detection device that translates people's presence in a space into an audio and visual feed. The device picks up the wireless signals of devices within a room and assigns a unique audio signal and color visualization to each IP address. This information is then "played" in real time, so the user can tell who is in the room at any given moment from each person's unique color and sound. Users can also play back the day's soundtrack, specifying how much of it to replay and how compressed or stretched the playback should be.
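The mapping from a device to its "signature" can be illustrated with a minimal sketch. It assumes that hashing the IP address is an acceptable way to give each device a stable color and tone, and it uses a hypothetical pentatonic palette of tones; the names `signature`, `rescale` and `PENTATONIC_HZ` are illustrative and do not describe the device's actual implementation. The `rescale` helper shows one simple way to compress or stretch a recorded day for playback.

```python
import colorsys
import hashlib

# Hypothetical tone palette (A minor pentatonic, in Hz); the real device's
# sound design is not documented here.
PENTATONIC_HZ = [220.00, 261.63, 293.66, 329.63, 392.00, 440.00]


def signature(ip: str) -> tuple[tuple[int, int, int], float]:
    """Map an IP address to a stable (RGB color, tone frequency) pair.

    Hashing makes the mapping deterministic: the same address always gets
    the same color and sound, while different addresses are spread out.
    """
    digest = hashlib.sha256(ip.encode("utf-8")).digest()
    hue = digest[0] / 256.0                        # first byte picks the hue
    r, g, b = colorsys.hsv_to_rgb(hue, 0.8, 0.9)   # fixed saturation/value
    color = (round(r * 255), round(g * 255), round(b * 255))
    tone = PENTATONIC_HZ[digest[1] % len(PENTATONIC_HZ)]  # second byte picks the tone
    return color, tone


def rescale(events: list[tuple[float, str]], factor: float) -> list[tuple[float, str]]:
    """Compress (factor < 1) or stretch (factor > 1) a recorded timeline.

    events: (seconds_since_start, ip) tuples logged during the day.
    """
    return [(t * factor, ip) for t, ip in events]
```

For example, `rescale(log, 0.01)` would replay a 10-hour log in about six minutes, with each device's color and tone appearing whenever its address was seen.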

Team: Anish Athalye and Lisa Tacoronte - MIT Media Lab "Design Across Scales" - Professor: Neri Oxman

SEA: Spatial Emotional Awareness Interface

SEA is a spatially aware wearable that uses temperature differentials to let the user feel, in real time, a heat map of their interactions with others in the space. By examining a user's emotional responses to conversations with people and providing feedback on each relationship through subtle changes in temperature, SEA supports more aware interactions between people.

Emotional responses are analyzed and interpreted from the user's voice and body. We used a Peltier module as the basis for the temperature change and BLE to determine the location of users. We also developed an app that gives users a more detailed view of how their relationships have changed over time.
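The feedback loop can be illustrated with a minimal sketch, under several assumptions that are not from the project itself: proximity is estimated from BLE signal strength (RSSI) with the standard log-distance path-loss model, each relationship is summarized as a hypothetical score in [-1, 1], and the Peltier module is driven by a PWM duty cycle whose polarity selects warming or cooling. The functions `rssi_to_distance`, `peltier_command` and `feedback`, and all default values, are illustrative.

```python
def rssi_to_distance(rssi_dbm: float, tx_power_dbm: float = -59.0,
                     path_loss_exponent: float = 2.0) -> float:
    """Log-distance path-loss estimate of range (metres) from a BLE RSSI reading.

    tx_power_dbm is the RSSI expected at 1 m; both defaults are assumptions
    that would need calibrating per device and room.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * path_loss_exponent))


def peltier_command(score: float, max_duty: float = 0.6) -> tuple[str, float]:
    """Map a relationship score in [-1, 1] to a (mode, PWM duty) pair.

    Reversing the current through a Peltier module swaps its hot and cold
    faces, so the sign of the score picks warming or cooling. max_duty caps
    the drive to keep the temperature change subtle.
    """
    score = max(-1.0, min(1.0, score))
    if score > 0:
        mode = "heat"
    elif score < 0:
        mode = "cool"
    else:
        mode = "off"
    return mode, abs(score) * max_duty


def feedback(rssi_by_person: dict[str, float], scores: dict[str, float],
             radius_m: float = 2.0) -> tuple[str, float]:
    """Drive the Peltier from the nearest known person within radius_m."""
    distances = {p: rssi_to_distance(r) for p, r in rssi_by_person.items()
                 if p in scores}
    nearby = {p: d for p, d in distances.items() if d <= radius_m}
    if not nearby:
        return "off", 0.0
    nearest = min(nearby, key=nearby.get)
    return peltier_command(scores[nearest])
```

Capping the duty cycle is a design choice aimed at keeping the sensation subtle, in line with the project's intent; RSSI-based ranging is coarse, so a real system would likely smooth readings over time.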

MIT Media Lab | Instructor: Pattie Maes | Human Machine Symbiosis | in collaboration with Laya Anesu, Lucas Cassiano, Anna Fuste, and Nikhita Singh

Gracenote: Converting space to sound

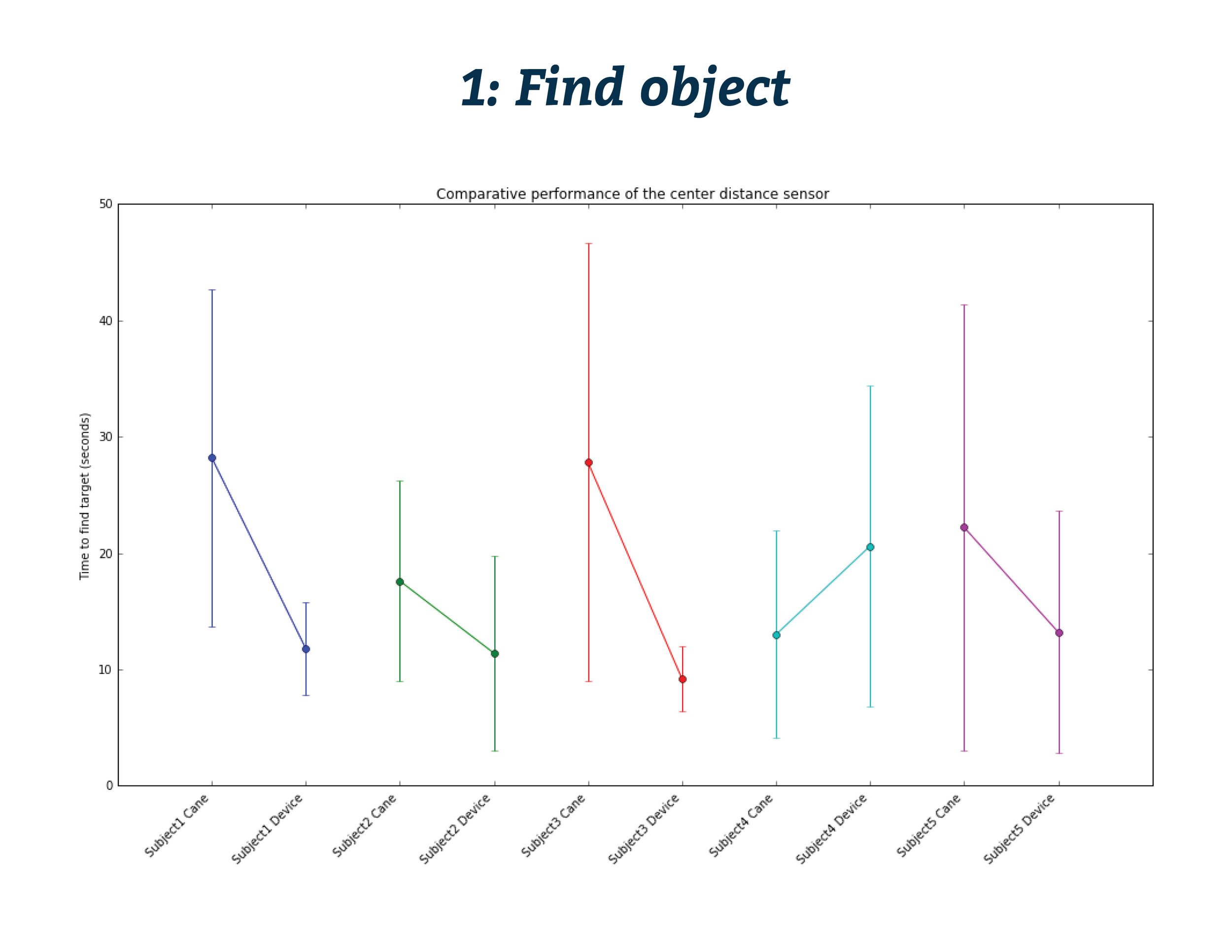

Gracenote is a wearable that lets spatial data be interpreted through the colors of sound chords. Sensors record distance data points, which are interpreted as measured music. The ambition of this project was to explore sound as a way of augmenting the stream of spatial information, as a replacement for the cane. Specifically, this exploration focused on situations where the necessary information is beyond the reach of a cane.
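One way to turn a distance reading into a chord is sketched below. It is a minimal illustration, not the project's mapping: the distance bands, the choice of triads (nearer obstacles get tenser, higher chords), and the names `BANDS`, `midi_to_hz` and `chord_for_distance` are all hypothetical. Notes are written as MIDI numbers and converted to frequencies with the standard equal-temperament formula.

```python
from typing import Optional

# Hypothetical distance bands (upper limit in metres) mapped to triads written
# as MIDI note numbers. Bands extend beyond typical cane reach; nearer obstacles
# get a tenser, higher chord.
BANDS = [
    (1.0, "B diminished", [71, 74, 77]),  # B4 D5 F5
    (2.0, "G major", [67, 71, 74]),       # G4 B4 D5
    (4.0, "E minor", [64, 67, 71]),       # E4 G4 B4
    (8.0, "C major", [60, 64, 67]),       # C4 E4 G4
]


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency of a MIDI note (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def chord_for_distance(distance_m: float) -> Optional[tuple[str, list[float]]]:
    """Return (chord name, note frequencies in Hz) for a distance reading.

    Returns None when the reading is beyond the last band, i.e. silence.
    """
    for limit, name, notes in BANDS:
        if distance_m < limit:
            return name, [round(midi_to_hz(n), 2) for n in notes]
    return None
```

For example, a reading of 5 m falls in the last band and yields a C major triad starting at about 261.63 Hz, while anything beyond 8 m stays silent.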

Team: Daniel Goodman, Gregory Lubin and Tiffany Kuo - MIT Media Lab, Human 2.0 - Professor: Hugh Herr